The “build vs. buy” dilemma is a familiar challenge to software engineers, but with the rapid growth of AI and machine learning, it’s become even more nuanced. So, how should you approach this? We’ve got the perfect guest today to help you out!

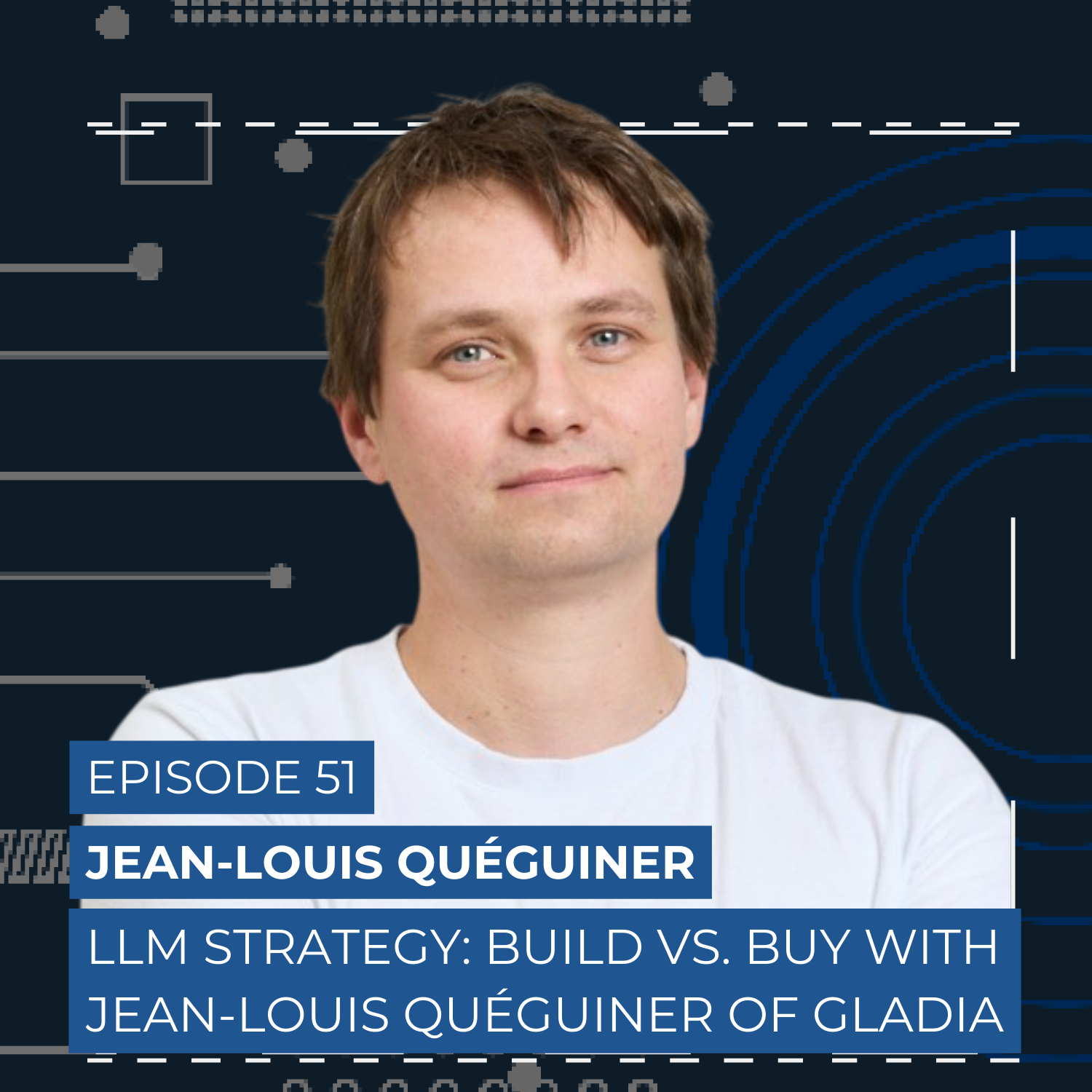

Meet Jean-Louis Quéguiner, Founder and CEO of Gladia. Gladia specializes in building audio infrastructure for automatic speech recognition. Their APIs provide asynchronous and live-streaming capabilities for voice recognition in multiple languages. Over the course of his career, Jean-Louis has worked as a data engineer, a CTO, and with AI and quantum computing services, making him the ideal person for this topic.

In this episode, we tackle the “build vs. buy” question for LLMs. Jean-Louis shares key considerations to keep in mind when architecting your LLM-based application, from understanding your specific use case to evaluating the trade-offs between building custom solutions and leveraging existing APIs.

Listen now, and you’ll walk away with a wealth of practical advice to help you make the right choice for your business.

Listen to the full episode on Spotify

Listen to the full episode on Apple Podcasts

Key Insights are below

About Guest:

Name: Jean-Louis Quéguiner

What he does: Jean-Louis is the Founder & CEO of Gladia.

Company: Gladia

Where to find Jean-Louis: LinkedIn | X

Key Insights

⚡Voice is the biggest challenge for AI. Unlike text-based outputs, where small errors may go unnoticed, speech recognition has a much higher expectation of accuracy. Jean-Louis explains, “Nobody likes errors, and there’s nothing that looks more like a summary of ChatGPT than a summary of Claude. They all kind of look the same, even if they are not using exactly the same words, globally, means the same thing. The problem you have with speech recognition is nobody’s going to be tolerant with you if you make a mistake on your address, on the names, first name, last name, phone numbers, etc. So you need to be perfect. And perfection is extremely hard when it comes to AI, especially if you want to have extremely low latency.”

⚡Match the LLM to your specific needs. There’s no one-size-fits-all LLM. The best choice depends on the specific requirements of your application. Jean-Louis shares some key considerations to keep in mind: “It’s always about those kind of questions that you need, which is what is your end goal? What is your use case? What are the conditions the system is running? What’s quality you have able to accept? Define what is acceptable as a metric, and then you’re going to be able to choose your model.”

⚡Keep testing different LLMs to see what works best. There’s no substitute for real-world data, so you need to keep testing different LLMs and versions. Jean-Louis says, “I think it’s hard to say now that you have a proper methodology. I think the only thing is to test, try, look at the leaderboards again, amazing LLM leaderboards and try to make these metrics that give a sense of what is good quality. But I think in the end […] it all comes to being in the real world situation. Try x, y, z and see how people are using it.”
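
To make the “define what is acceptable as a metric, then choose your model” advice concrete, here is a minimal Python sketch. It is only an illustration, not something discussed in the episode: the model names, the measured numbers, the thresholds, and the tie-breaking rule (cheapest model that clears the bar) are all hypothetical assumptions. In practice, the measurements would come from your own tests on real-world data.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    """Measurements for one model, gathered from your own tests (hypothetical fields)."""
    name: str
    accuracy: float              # fraction of test cases judged acceptable, 0..1
    p95_latency_ms: float        # 95th-percentile response time
    cost_per_1k_requests: float  # in whatever currency you budget in


@dataclass(frozen=True)
class Acceptance:
    """Your definition of 'good enough', written down before testing."""
    min_accuracy: float
    max_p95_latency_ms: float


def good_enough(candidate: Candidate, bar: Acceptance) -> bool:
    """True if the candidate meets every acceptance threshold."""
    return (
        candidate.accuracy >= bar.min_accuracy
        and candidate.p95_latency_ms <= bar.max_p95_latency_ms
    )


def choose_model(candidates: Sequence[Candidate], bar: Acceptance) -> Optional[Candidate]:
    """Return the cheapest candidate that clears the bar, or None if none does."""
    passing = [c for c in candidates if good_enough(c, bar)]
    if not passing:
        return None
    return min(passing, key=lambda c: c.cost_per_1k_requests)


# Made-up numbers purely for illustration.
candidates = [
    Candidate("model-a", accuracy=0.97, p95_latency_ms=900.0, cost_per_1k_requests=12.0),
    Candidate("model-b", accuracy=0.93, p95_latency_ms=250.0, cost_per_1k_requests=3.0),
    Candidate("model-c", accuracy=0.95, p95_latency_ms=300.0, cost_per_1k_requests=5.0),
]
bar = Acceptance(min_accuracy=0.94, max_p95_latency_ms=500.0)

best = choose_model(candidates, bar)
print(best.name if best else "no candidate meets the bar")
```

With these made-up numbers, the most accurate model is too slow and the cheapest one is not accurate enough, so the sketch picks the one in the middle. Re-running the same check whenever a new model or version appears is one simple way to keep testing without moving the goalposts.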

Episode Highlights

Focus on what matters most; buy what’s already solved

Your time and resources are too valuable to waste. Instead of reinventing the wheel, focus on solving the unique challenges that differentiate your business. If there’s already a solution out there for something you need, buy it!

Jean-Louis explains it perfectly, “You’re smart, and if I need to take your smart brain for something, it is to solve my own problem, which is my business problem. So everything that was about billing, invoicing wasn’t done internally; we just bought from a provider. It’s actually called Hyperline. The only things like authentication. Yes, we could do our own authentication, but I don’t want you to spend time on that. I want you to spend time on the real problem we have to solve. So all the questions were, ‘How much time are we going to spend? What is the ROI that we’re going to have, and what is the differentiator we are bringing to market by doing everything internally?’ And most of the thing was focus on the problem and don’t focus on the things that are already being solved by the industry and just pay for it and get the go-to-market motion that you need.”

Define what ‘good enough’ means for you

AI is never truly done because perfection isn’t the goal. Instead of striving for perfection, AI projects should strive for a practical level of effectiveness that meets your needs. ‘Good enough’ should be defined in advance to avoid unnecessary costs and effort.

Jean-Louis explains, “You don’t want the system to be 100% perfect, especially with GenAI when you want to have a bit of kind of craziness and creativity and stuff. So the question is what done means and I think that’s probably happening at the end. So perfect. That’s probably one of the big topics to address. Define the definition of done. Define what is good enough. Otherwise it’s going to be an endless spend of money, endless spend of engineering, endless spend of project management. Define what is good enough.”

Voice AI is shaping the future of interactions

Spoken language is the most natural and compact way to communicate. It cuts through complexity in seconds and makes interactions more efficient than ever. As Jean-Louis nicely puts it, “We don’t need to write anymore. So that’s the big shift in humanity, in my opinion. Voice is the most compact form of knowledge. And I will finish with that. If you think about ChatGPT. ChatGPT is a podcast. You have someone that doesn’t know anything about the sector or about a problem that has the experts, and the expert is giving answers, and podcast is the highest form of intelligence and sharing in humanity. And LLMs are just replicating that. It’s your personal podcasters. So, I think voice is the key to humanity knowledge. And that’s what makes us human and not machines is because we have ideas and we are able to give the flow in a very compact way in a very short amount of time.”